# Installing Kubernetes on GCP

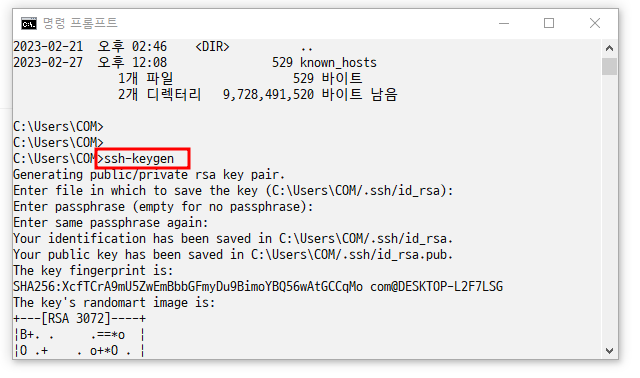

- ssh-keygen 키페어 생성

- dir .ssh로 키 확인

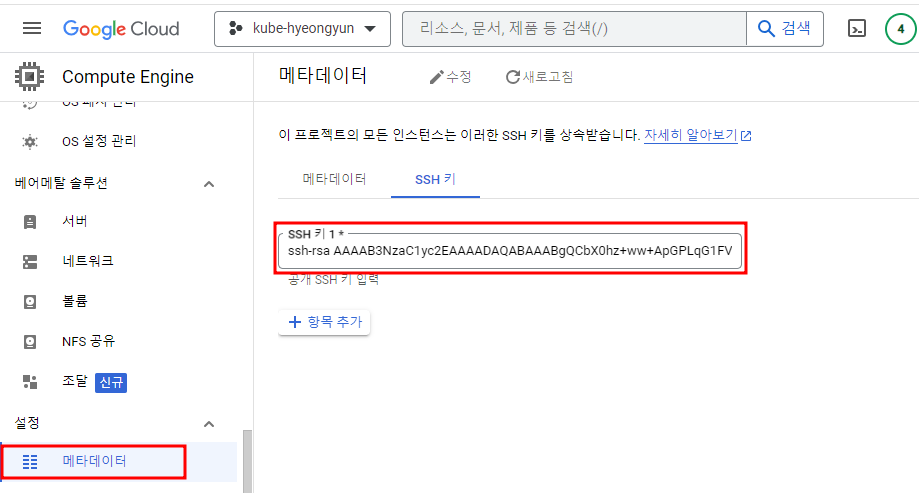

- 메모장으로 id_rsa.pub 파일을 열고

- gcp에서 키 항목에 추가

## Multi-Node Installation

--- All Nodes ---

```shell
cat <<EOF >> /etc/hosts
10.178.0.9 master1
10.178.0.8 worker01
10.178.0.7 worker2
EOF

# Run on every node with that node's own name, matching /etc/hosts (master1, worker01, worker2)
hostnamectl set-hostname master1

curl https://download.docker.com/linux/centos/docker-ce.repo -o /etc/yum.repos.d/docker-ce.repo
sed -i -e "s/enabled=1/enabled=0/g" /etc/yum.repos.d/docker-ce.repo
yum --enablerepo=docker-ce-stable -y install docker-ce-19.03.15-3.el7

mkdir /etc/docker
cat <<EOF | sudo tee /etc/docker/daemon.json
{
  "exec-opts": ["native.cgroupdriver=systemd"],
  "log-driver": "json-file",
  "log-opts": {
    "max-size": "100m"
  },
  "storage-driver": "overlay2"
}
EOF

systemctl enable --now docker
systemctl daemon-reload
systemctl restart docker

systemctl disable --now firewalld
setenforce 0
sed -i 's/^SELINUX=enforcing$/SELINUX=disabled/' /etc/selinux/config

# Check swap with `free -h`; swap must be turned off before Kubernetes can be installed
swapoff -a
sed -i '/ swap / s/^/#/' /etc/fstab

# Let bridged pod traffic pass through iptables so Kubernetes can reach into the pods
cat <<EOF > /etc/sysctl.d/k8s.conf
net.bridge.bridge-nf-call-ip6tables = 1
net.bridge.bridge-nf-call-iptables = 1
EOF
sysctl --system   # apply every sysctl configuration file

reboot
```
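The `cat <<EOF >> /etc/hosts` step appends unconditionally, so running it a second time leaves duplicate entries. Below is a minimal idempotent alternative. It is a sketch built on a hypothetical `add_host` function that is not part of the original post. The function takes the hosts file path as an argument, so you can try it on a scratch file before pointing it at `/etc/hosts`.

```shell
#!/bin/sh
# Hypothetical helper (not from the original post): append "IP NAME" to a
# hosts-style file only if NAME is not already mapped on a non-comment line.
add_host() {
  file=$1 ip=$2 name=$3
  # NAME must appear as a whole word after some whitespace (i.e. after the IP column)
  if grep -Eq "^[^#]*[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on a real node (as root):
# add_host /etc/hosts 10.178.0.9 master1
# add_host /etc/hosts 10.178.0.8 worker01
# add_host /etc/hosts 10.178.0.7 worker2
```

Because the check keys on the host name rather than on the whole line, re-running the three calls leaves the file unchanged.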

--- Installing kubeadm (multi-node: master and worker nodes) ---

```shell
# Note: this Google-hosted repository has since been frozen and removed in favor of pkgs.k8s.io
cat <<'EOF' > /etc/yum.repos.d/kubernetes.repo
[kubernetes]
name=Kubernetes
baseurl=https://packages.cloud.google.com/yum/repos/kubernetes-el7-$basearch
enabled=1
gpgcheck=0
repo_gpgcheck=0
gpgkey=https://packages.cloud.google.com/yum/doc/yum-key.gpg https://packages.cloud.google.com/yum/doc/rpm-package-key.gpg
EOF

# The kubelet must run on every node: it is the node agent that starts and manages the pods' containers.
# kubeadm is the tool that bootstraps the cluster (similar in role to minikube).
yum -y install kubeadm-1.19.16-0 kubelet-1.19.16-0 kubectl-1.19.16-0 --disableexcludes=kubernetes

# Enable the kubelet so it starts at boot; it keeps restarting until kubeadm init/join gives it a configuration
systemctl enable kubelet

poweroff
```

--- Master ---

```shell
# 10.244.0.0/16 is the pod network that flannel's manifest uses by default
kubeadm init --apiserver-advertise-address=10.178.0.9 --pod-network-cidr=10.244.0.0/16

mkdir -p $HOME/.kube
cp -i /etc/kubernetes/admin.conf $HOME/.kube/config
chown $(id -u):$(id -g) $HOME/.kube/config

kubectl apply -f https://raw.githubusercontent.com/flannel-io/flannel/master/Documentation/kube-flannel.yml
kubectl get pods --all-namespaces

# Enable kubectl auto-completion
yum install -y bash-completion
source <(kubectl completion bash)
echo "source <(kubectl completion bash)" >> ~/.bashrc

exit
```
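From here on the post types `k` instead of `kubectl`, but it never defines that alias. The sketch below assumes bash and follows the pattern commonly shown in the kubectl documentation. Add both lines to `~/.bashrc` after the completion line above.

```shell
# Shorthand used in the rest of this post
alias k=kubectl

# Reuse kubectl's completion function for the alias. This only applies in bash,
# and __start_kubectl exists only after `source <(kubectl completion bash)`.
if command -v complete >/dev/null 2>&1; then
  complete -o default -F __start_kubectl k
fi
```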

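--- Node ---

On each worker, run the `kubeadm join` command that `kubeadm init` printed on the master. The two commands below are sample outputs, and neither address matches the master used here (10.178.0.9). Copy the IP, token, and hash from your own init output instead. If the token has expired (by default tokens are valid for 24 hours), print a fresh join command on the master with `kubeadm token create --print-join-command`.

```shell
kubeadm join 172.25.0.149:6443 --token r47zye.ye93ffmcm52769a9 \
    --discovery-token-ca-cert-hash sha256:50670fd667c74b723028c7a09dc40de3b0bd1b448a271947853689e32222909f

kubeadm join 192.168.2.105:6443 --token 0pn2n9.s5qt8b6w9ysoovx5 \
    --discovery-token-ca-cert-hash sha256:0316ed88b0fb8f63c465930b554d7532ef713df06470375e7ee17728b33a5a31

# Back on the master
kubectl get nodes
```

* Error hit during the join: `[ERROR FileContent--proc-sys-net-ipv4-ip_forward]: /proc/sys/net/ipv4/ip_forward contents are not set to 1`

- Fix
  - Open /etc/sysctl.conf and add `net.ipv4.ip_forward=1`
  - Then apply it with `sysctl -p`
  - If the installation goes wrong, reset the node with `kubeadm reset` and start over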
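The fix above edits /etc/sysctl.conf by hand. Below is a minimal scripted version of the same fix, built on a hypothetical `set_sysctl` function that is not from the original post. It rewrites an existing line for the key, including a commented-out one, or appends the key if it is absent. That makes it safe to run more than once.

```shell
# Hypothetical helper: set "KEY = VALUE" in a sysctl-style file.
# An existing line for KEY (commented out or not) is rewritten; otherwise one is appended.
set_sysctl() {
  file=$1 key=$2 value=$3
  touch "$file"
  if grep -Eq "^[#[:space:]]*${key}[[:space:]]*=" "$file"; then
    # -i.bak works with both GNU and BSD sed; remove the backup afterwards
    sed -i.bak -E "s|^[#[:space:]]*${key}[[:space:]]*=.*|${key} = ${value}|" "$file"
    rm -f "$file.bak"
  else
    printf '%s = %s\n' "$key" "$value" >> "$file"
  fi
}

# On the failing node (as root), then reload /etc/sysctl.conf:
# set_sysctl /etc/sysctl.conf net.ipv4.ip_forward 1
# sysctl -p
```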

# GCP: MetalLB, Deployment, Scale Out/In, Rolling Update

**MetalLB setup**

```shell
cd ~
git clone https://github.com/hali-linux/_Book_k8sInfra.git
```

**Switching to the Bitnami images**

```shell
vi /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml
```

In /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml, change the two image fields to:

- `bitnami/metallb-speaker:0.9.3`
- `bitnami/metallb-controller:0.9.3`

```shell
kubectl apply -f /root/_Book_k8sInfra/ch3/3.3.4/metallb.yaml
kubectl get pods -n metallb-system -o wide
vi metallb-l2config.yaml
```

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: nginx-ip-range
      protocol: layer2
      addresses:
      - 10.178.0.9/32
      - 10.178.0.8/32
      - 10.178.0.7/32
```

```shell
kubectl apply -f metallb-l2config.yaml
kubectl describe configmaps -n metallb-system
```

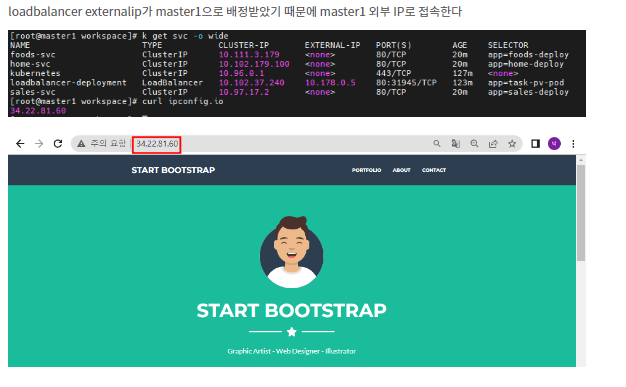

- On GCP, the address pool is configured with the nodes' internal IPs, but you connect through their external IPs.
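The address pool above simply lists the nodes' internal IPs as /32 entries. If those IPs change, the ConfigMap can be regenerated rather than edited by hand. The sketch below uses a hypothetical `metallb_l2_config` function that prints the same MetalLB v0.9.x ConfigMap for whatever addresses it is given. Keep in mind that MetalLB v0.13 and later replaced this ConfigMap format with the `IPAddressPool` and `L2Advertisement` custom resources, so the output only suits the older version installed here.

```shell
# Hypothetical helper: print the MetalLB v0.9.x layer-2 ConfigMap,
# turning each argument into a /32 entry of the address pool.
metallb_l2_config() {
  cat <<'EOF'
apiVersion: v1
kind: ConfigMap
metadata:
  namespace: metallb-system
  name: config
data:
  config: |
    address-pools:
    - name: nginx-ip-range
      protocol: layer2
      addresses:
EOF
  for ip in "$@"; do
    printf '      - %s/32\n' "$ip"
  done
}

# Usage (on the master):
# metallb_l2_config 10.178.0.9 10.178.0.8 10.178.0.7 | kubectl apply -f -
```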

# Deployment

```shell
vi deployment.yaml
```

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nginx-deployment
spec:
  replicas: 3
  selector:
    matchLabels:
      app: nginx-deployment
  template:
    metadata:
      name: nginx-deployment
      labels:
        app: nginx-deployment
    spec:
      containers:
      - name: nginx-deployment-container
        image: nginx
        ports:
        - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: loadbalancer-deployment
spec:
  type: LoadBalancer
  # externalIPs:
  # - 192.168.0.143
  selector:
    app: nginx-deployment
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

- This file creates the Deployment and the LoadBalancer Service together.

```shell
kubectl apply -f deployment.yaml
kubectl get deployments.apps -o wide
kubectl describe deployments.apps nginx-deployment
curl ipconfig.io   # run on the master: prints its external (public) IP
```

- Access the service through that IP.

## ScaleOut

```shell
k scale --current-replicas=3 --replicas=10 deployment.apps/nginx-deployment
k get po -o wide
```

## ScaleIn

```shell
k scale --current-replicas=10 --replicas=4 deployment.apps/nginx-deployment
k get po -o wide
```

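# Rolling Update

- `kubectl set image deployment.apps/nginx-deployment nginx-deployment-container=hyeongyun/web-site:food`

※ Blue/green deployment needs more resources, because both versions run in full at the same time.

※ With a rolling update, users see the change as it happens, because old and new pods serve traffic side by side during the rollout.

- The image was changed successfully, but the nginx page kept appearing.

- `k edit svc loadbalancer-service-pod`

- The Service's selector still pointed at nginx-pod, which is why the nginx page kept appearing.

- After I changed the selector to the Deployment's pod label, the food page appeared as expected.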
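How much overlap users see during a rolling update is bounded by the Deployment's `maxSurge` and `maxUnavailable` settings. Both default to 25%. When they are percentages, Kubernetes rounds `maxSurge` up and `maxUnavailable` down. The sketch below uses a hypothetical `rollout_bounds` function that reproduces only that arithmetic. It does not talk to a cluster.

```shell
# Hypothetical helper: pod-count bounds during a RollingUpdate.
# maxSurge is rounded up, maxUnavailable is rounded down (defaults: 25% / 25%).
rollout_bounds() {
  replicas=$1 surge_pct=${2:-25} unavail_pct=${3:-25}
  surge=$(( (replicas * surge_pct + 99) / 100 ))   # ceiling
  unavail=$(( replicas * unavail_pct / 100 ))      # floor
  echo "max_pods=$(( replicas + surge )) min_available=$(( replicas - unavail ))"
}

rollout_bounds 10   # after the scale-out above: max_pods=13 min_available=8
rollout_bounds 4    # after the scale-in:        max_pods=5 min_available=3
```

With 4 replicas, the rollout can therefore run up to 5 pods at once while keeping at least 3 available. That is why old and new pages can both be served for a while.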

# Multi-Container Pod

```shell
vi multipod.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: multipod
spec:
  containers:
  - name: nginx-container    # first container
    image: nginx:1.14
    ports:
    - containerPort: 80
  - name: centos-container   # second container
    image: centos:7
    command:
    - sleep
    - "10000"
```

# Ingress

- On the Docker server:

```shell
docker tag 192.168.1.148:5000/test-home:v0.0 hyeongyun/test-home:v0.0
docker tag 192.168.1.148:5000/test-home:v1.0 hyeongyun/test-home:v1.0
docker tag 192.168.1.148:5000/test-home:v2.0 hyeongyun/test-home:v2.0

docker push hyeongyun/test-home:v0.0
docker push hyeongyun/test-home:v1.0
docker push hyeongyun/test-home:v2.0
```

- Push each image version to Docker Hub.

## Installing the ingress controller

```shell
git clone https://github.com/hali-linux/_Book_k8sInfra.git   # skip if it was already cloned into ~ for MetalLB
kubectl apply -f /root/_Book_k8sInfra/ch3/3.3.2/ingress-nginx.yaml
kubectl get pods -n ingress-nginx
mkdir ingress && cd $_
vi ingress-deploy.yaml
```

```yaml
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: foods-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: foods-deploy
  template:
    metadata:
      labels:
        app: foods-deploy
    spec:
      containers:
      - name: foods-deploy
        image: 192.168.1.148:5000/test-home:v1.0
---
apiVersion: v1
kind: Service
metadata:
  name: foods-svc
spec:
  type: ClusterIP
  selector:
    app: foods-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sales-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: sales-deploy
  template:
    metadata:
      labels:
        app: sales-deploy
    spec:
      containers:
      - name: sales-deploy
        image: 192.168.1.148:5000/test-home:v2.0
---
apiVersion: v1
kind: Service
metadata:
  name: sales-svc
spec:
  type: ClusterIP
  selector:
    app: sales-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: home-deploy
spec:
  replicas: 1
  selector:
    matchLabels:
      app: home-deploy
  template:
    metadata:
      labels:
        app: home-deploy
    spec:
      containers:
      - name: home-deploy
        image: 192.168.1.148:5000/test-home:v0.0
---
apiVersion: v1
kind: Service
metadata:
  name: home-svc
spec:
  type: ClusterIP
  selector:
    app: home-deploy
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

- If the cluster cannot pull from the private registry at 192.168.1.148:5000 (for example from GCP), point the images at the Docker Hub copies pushed above instead (`hyeongyun/test-home:v1.0`, `v2.0`, `v0.0`).

```shell
kubectl apply -f ingress-deploy.yaml
kubectl get all
```

```shell
vi ingress-config.yaml
```

```yaml
apiVersion: networking.k8s.io/v1beta1   # removed in Kubernetes 1.22; works on this 1.19 cluster
kind: Ingress
metadata:
  name: ingress-nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /foods
        backend:
          serviceName: foods-svc
          servicePort: 80
      - path: /sales
        backend:
          serviceName: sales-svc
          servicePort: 80
      - path:              # no path: every other request goes to home-svc
        backend:
          serviceName: home-svc
          servicePort: 80
```

```shell
kubectl apply -f ingress-config.yaml
```
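The `networking.k8s.io/v1beta1` Ingress API was removed in Kubernetes 1.22. `networking.k8s.io/v1` has been available since 1.19, so the same routes can already be written in the newer schema on this cluster. The sketch below uses a hypothetical `ingress_v1_manifest` function that prints the equivalent v1 object. In that schema `pathType` is mandatory and backends become `service.name` / `service.port.number`. It assumes an ingress-nginx controller recent enough to watch v1 Ingresses. The older controller from the book's manifest may not be.

```shell
# Hypothetical helper: the three routes of ingress-config.yaml in the
# networking.k8s.io/v1 Ingress schema.
ingress_v1_manifest() {
  cat <<'EOF'
apiVersion: networking.k8s.io/v1
kind: Ingress
metadata:
  name: ingress-nginx
  annotations:
    nginx.ingress.kubernetes.io/rewrite-target: /
spec:
  rules:
  - http:
      paths:
      - path: /foods
        pathType: Prefix
        backend:
          service:
            name: foods-svc
            port:
              number: 80
      - path: /sales
        pathType: Prefix
        backend:
          service:
            name: sales-svc
            port:
              number: 80
      - path: /            # catch-all, replaces the empty path of v1beta1
        pathType: Prefix
        backend:
          service:
            name: home-svc
            port:
              number: 80
EOF
}

# Usage: ingress_v1_manifest | kubectl apply -f -
```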

```shell
vi ingress-service.yaml
```

```yaml
apiVersion: v1
kind: Service
metadata:
  name: nginx-ingress-controller
  namespace: ingress-nginx
spec:
  ports:
  - name: http
    protocol: TCP
    port: 80
    targetPort: 80
  - name: https
    protocol: TCP
    port: 443
    targetPort: 443
  selector:
    app.kubernetes.io/name: ingress-nginx   # the nginx pods that act as the ingress controller
  type: LoadBalancer
  # externalIPs:
  # - 192.168.2.105
```

```shell
kubectl apply -f ingress-service.yaml
```

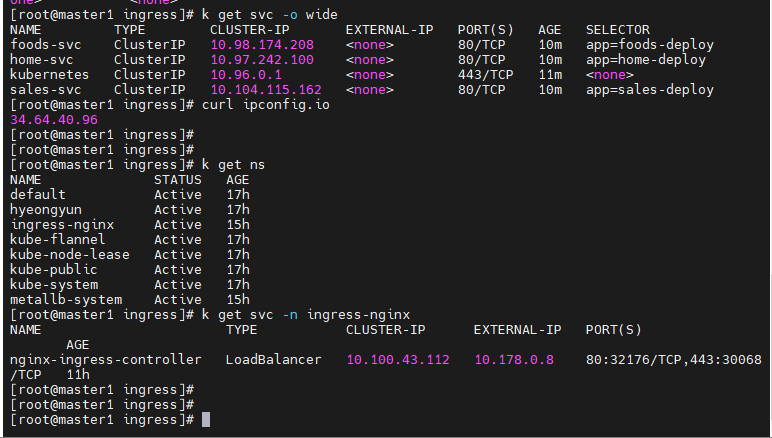

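- Path-based routing

## Troubleshooting

- I kept looking for the Service in the default namespace, but the ingress-nginx Service is not there.

- As a result, opening the address printed by `curl ipconfig.io` did not return the expected page.

- Running `k get svc -n ingress-nginx` showed the LoadBalancer Service inside the ingress-nginx namespace. Opening the GCP public IP of the node whose IP was assigned to that Service returned the page correctly.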

# PV, PVC

```shell
vi pv-pvc-pod.yaml
```

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: task-pv-volume
  labels:
    type: local
spec:
  storageClassName: manual
  capacity:
    storage: 10Mi
  accessModes:
  - ReadWriteOnce
  hostPath:
    path: "/mnt/data"
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: task-pv-claim
spec:
  storageClassName: manual
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 10Mi
  selector:
    matchLabels:
      type: local
---
apiVersion: v1
kind: Pod
metadata:
  name: task-pv-pod
  labels:
    app: task-pv-pod
spec:
  volumes:
  - name: task-pv-storage
    persistentVolumeClaim:
      claimName: task-pv-claim
  containers:
  - name: task-pv-container
    image: nginx
    ports:
    - containerPort: 80
      name: "http-server"
    volumeMounts:
    - mountPath: "/usr/share/nginx/html"
      name: task-pv-storage
```

```shell
k apply -f pv-pvc-pod.yaml
```

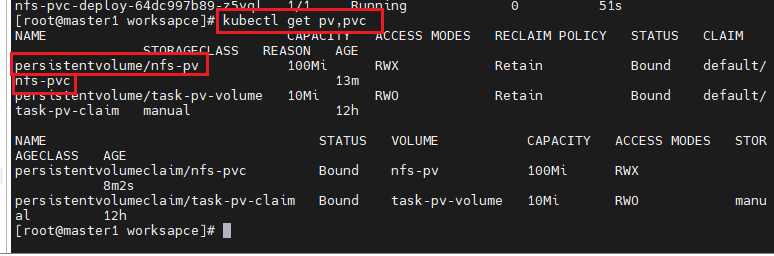

```shell
k get pv,pvc
k get pod -o wide   # the pod was scheduled on worker2
```

In a session on worker2:

```shell
# Tab completion finds /mnt/data/: it did not exist before and was created on this
# node when the pod using the PV/PVC started here (hostPath volumes are node-local)
ls /mnt/data/
```

Upload the tar file to use as the home page, then extract it:

```shell
tar xvf /home/com/gcp.tar -C /mnt/data
```

Back on the master:

```shell
k get svc -o wide
k edit svc loadbalancer-deployment
```

Change the selector so that the Service points at task-pv-pod:

```yaml
apiVersion: v1
kind: Service
metadata:
  annotations:
    kubectl.kubernetes.io/last-applied-configuration: |
      {"apiVersion":"v1","kind":"Service","metadata":{"annotations":{},"name":"loadbalancer-deployment","namespace":"default"},"spec":{"ports":[{"port":80,"protocol":"TCP","targetPort":80}],"selector":{"app":"nginx-deployment"},"type":"LoadBalancer"}}
  creationTimestamp: "2023-06-08T06:36:17Z"
  name: loadbalancer-deployment
  namespace: default
  resourceVersion: "7071"
  selfLink: /api/v1/namespaces/default/services/loadbalancer-deployment
  uid: 6fb69d55-4157-456f-bb91-950bb8fda581
spec:
  clusterIP: 10.102.37.240
  externalTrafficPolicy: Cluster
  ports:
  - nodePort: 31945
    port: 80
    protocol: TCP
    targetPort: 80
  selector:
    app: task-pv-pod   # changed from app: nginx-deployment
  sessionAffinity: None
  type: LoadBalancer
status:
  loadBalancer:
    ingress:
    - ip: 10.178.0.5
```

# nfs

## Installing Grafana

--- metrics-server

```shell
kubectl apply -f https://github.com/kubernetes-sigs/metrics-server/releases/download/v0.6.1/components.yaml
kubectl edit deployments.apps -n kube-system metrics-server
# In the editor, add --kubelet-insecure-tls to the metrics-server container's args
kubectl top node
kubectl top pod
```

- `k top node` shows the CPU and memory usage of each node.

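## Installing NFS

- In the GCP VPC network settings, look up the subnet range of your region (Seoul).

- NFS server (on the master):

```shell
yum install -y nfs-utils.x86_64
mkdir /nfs_shared
chmod 777 /nfs_shared/
echo '/nfs_shared 10.178.0.0/20(rw,sync,no_root_squash)' >> /etc/exports
systemctl enable --now nfs
```

- The worker nodes also need `nfs-utils` installed so that they can mount the share.

### vi nfs-pv.yaml

```yaml
apiVersion: v1
kind: PersistentVolume
metadata:
  name: nfs-pv
spec:
  capacity:
    storage: 100Mi
  accessModes:
  - ReadWriteMany   # read-write, mountable by many nodes at the same time
  persistentVolumeReclaimPolicy: Retain
  nfs:
    server: 10.178.0.9
    path: /nfs_shared
```

- Set `server` to the master node's IP address, since the master is the node that hosts the NFS export.

```shell
kubectl apply -f nfs-pv.yaml
kubectl get pv
```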
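The export line only admits clients inside the region's range (10.178.0.0/20 for Seoul, as used in /etc/exports above). A quick way to confirm that every node IP falls inside it is the minimal sketch below. It relies on two hypothetical functions, `ip_to_int` and `in_cidr`, and assumes a shell with 64-bit arithmetic such as bash or dash.

```shell
# Hypothetical helpers: convert a dotted IPv4 address to an integer and
# test whether it lies inside a CIDR block.
ip_to_int() {
  old_ifs=$IFS; IFS=.
  set -- $1            # split the address into its four octets
  IFS=$old_ifs
  echo $(( ($1 << 24) + ($2 << 16) + ($3 << 8) + $4 ))
}

in_cidr() {
  ip=$(ip_to_int "$1")
  net=$(ip_to_int "${2%/*}")
  bits=${2#*/}
  mask=$(( (0xFFFFFFFF << (32 - bits)) & 0xFFFFFFFF ))   # top $bits bits set
  [ $(( ip & mask )) -eq $(( net & mask )) ]
}

# Usage:
# for n in 10.178.0.9 10.178.0.8 10.178.0.7; do
#   in_cidr "$n" 10.178.0.0/20 && echo "$n allowed" || echo "$n NOT allowed"
# done
```

A /20 spans 4096 addresses, so 10.178.0.0/20 runs from 10.178.0.0 to 10.178.15.255.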

### vi nfs-pvc.yaml

```yaml
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: nfs-pvc
spec:
  accessModes:   # the claim binds to a PV whose access modes (and capacity) satisfy it, much like a selector
  - ReadWriteMany
  resources:
    requests:
      storage: 10Mi
```

```shell
kubectl apply -f nfs-pvc.yaml
kubectl get pv,pvc
```

### vi nfs-pvc-deploy.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: nfs-pvc-deploy
spec:
  replicas: 4
  selector:
    matchLabels:
      app: nfs-pvc-deploy
  template:
    metadata:
      labels:
        app: nfs-pvc-deploy
    spec:
      containers:
      - name: nginx
        image: nginx
        volumeMounts:
        - name: nfs-vol
          mountPath: /usr/share/nginx/html
      volumes:
      - name: nfs-vol
        persistentVolumeClaim:
          claimName: nfs-pvc
```

```shell
kubectl apply -f nfs-pvc-deploy.yaml
```

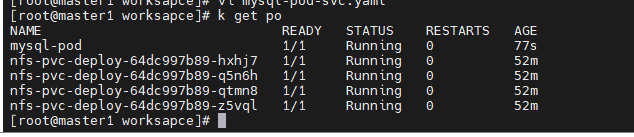

```shell
kubectl get pod
kubectl expose deployment nfs-pvc-deploy --type=LoadBalancer --name=nfs-pvc-deploy-svc1 --port=80
```

-> This deploys the Service.

- Extract the gcp.tar file into /nfs_shared/.

- Check the node's public IP with `curl ipconfig.io`, then open that IP in a browser.

# ConfigMap

* A ConfigMap is an API object used to store non-confidential data in key-value pairs.

## vi configmap-dev.yaml

```yaml
apiVersion: v1
kind: ConfigMap
metadata:
  name: config-dev
  namespace: default
data:
  DB_URL: localhost
  DB_USER: myuser
  DB_PASS: mypass
  DEBUG_INFO: debug
```

```shell
k apply -f configmap-dev.yaml
kubectl describe configmaps config-dev
```

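### vi configmap-wordpress.yaml

- Sensitive values such as passwords and user names were removed from this ConfigMap.

- Those sensitive values are kept separately in secret.yaml.

```shell
kubectl apply -f configmap-wordpress.yaml
kubectl describe configmaps config-wordpress
```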

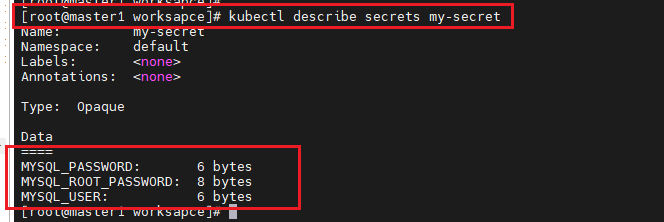

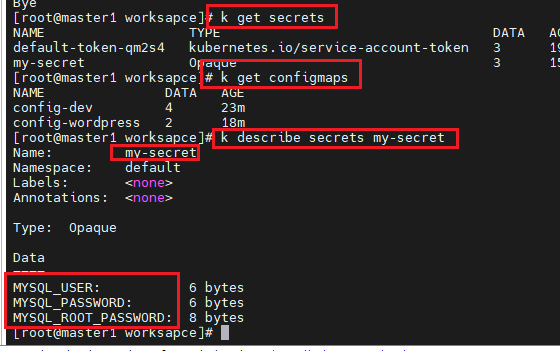

### vi secret.yaml (the file that holds the sensitive values)

```yaml
apiVersion: v1
kind: Secret
metadata:
  name: my-secret
stringData:
  MYSQL_ROOT_PASSWORD: mode1752
  MYSQL_USER: wpuser
  MYSQL_PASSWORD: wppass
```

```shell
kubectl apply -f secret.yaml
kubectl describe secrets my-secret
```
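`kubectl describe secrets` prints only the size of each value, not the value itself. Values written under `stringData` are stored base64-encoded in the Secret's `data` field, and base64 is an encoding, not encryption. The two small hypothetical helpers below show the round trip. The commented `kubectl get ... -o jsonpath` line shows one way to read a single key back from the cluster.

```shell
# Hypothetical helpers: base64 encoding as used in a Secret's .data field
# (printf '%s' avoids encoding a trailing newline).
b64_encode() { printf '%s' "$1" | base64; }
b64_decode() { printf '%s' "$1" | base64 -d; }

# b64_encode wppass     # -> d3BwYXNz
# Read one key back from the cluster:
# kubectl get secret my-secret -o jsonpath='{.data.MYSQL_USER}' | base64 -d
```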

### vi mysql-pod-svc.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: mysql-pod
  labels:
    app: mysql-pod
spec:
  containers:
  - name: mysql-container
    image: mysql:5.7
    envFrom:
    - configMapRef:
        name: config-wordpress
    - secretRef:          # loads the keys from secret.yaml as environment variables
        name: my-secret
    ports:
    - containerPort: 3306
---
apiVersion: v1
kind: Service
metadata:
  name: mysql-svc
spec:
  type: ClusterIP
  selector:
    app: mysql-pod
  ports:
  - protocol: TCP
    port: 3306
    targetPort: 3306
```

```shell
k apply -f mysql-pod-svc.yaml
k get pod
```

### vi wordpress-pod-svc.yaml

- Check the variable names with `k describe secrets my-secret`, then edit this file to match them.

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: wordpress-pod
  labels:
    app: wordpress-pod
spec:
  containers:
  - name: wordpress-container
    image: wordpress
    env:
    - name: WORDPRESS_DB_HOST     # without this the image falls back to the host "mysql"
      value: mysql-svc:3306
    - name: WORDPRESS_DB_USER
      valueFrom:
        secretKeyRef:
          name: my-secret
          key: MYSQL_USER
    - name: WORDPRESS_DB_PASSWORD
      valueFrom:
        secretKeyRef:
          name: my-secret
          key: MYSQL_PASSWORD
    - name: WORDPRESS_DB_NAME
      valueFrom:
        configMapKeyRef:
          name: config-wordpress
          key: MYSQL_DATABASE
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: wordpress-svc
spec:
  type: LoadBalancer
  # externalIPs:
  # - 192.168.56.103
  selector:
    app: wordpress-pod
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

```shell
k get po -o wide
```

### vi mysql-deploy-svc.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: mysql-deploy

labels:

app: mysql-deploy

spec:

replicas: 1

selector:

matchLabels:

app: mysql-deploy

template:

metadata:

labels:

app: mysql-deploy

spec:

containers:

- name: mysql-container

image: mysql:5.7

envFrom:

- configMapRef:

name: config-wordpress

- secretRef:

name: my-secret

ports:

- containerPort: 3306

---

apiVersion: v1

kind: Service

metadata:

name: mysql-svc

spec:

type: ClusterIP

selector:

app: mysql-deploy

ports:

- protocol: TCP

port: 3306

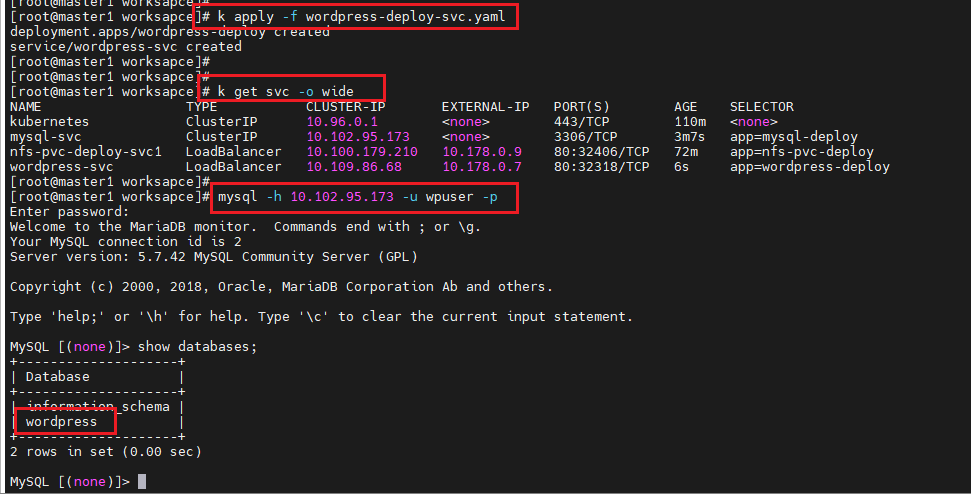

targetPort: 3306# k apply -f mysql-deploy-svc.yaml

# k get svc -o wide

### vi wordpress-deploy-svc.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: wordpress-deploy

labels:

app: wordpress-deploy

spec:

replicas: 1

selector:

matchLabels:

app: wordpress-deploy

template:

metadata:

labels:

app: wordpress-deploy

spec:

containers:

- name: wordpress-container

image: wordpress

env:

- name: WORDPRESS_DB_HOST

value: mysql-svc:3306

- name: WORDPRESS_DB_USER

valueFrom:

secretKeyRef:

name: my-secret

key: MYSQL_USER # wpuser

- name: WORDPRESS_DB_PASSWORD

valueFrom:

secretKeyRef:

name: my-secret

key: MYSQL_PASSWORD # wppass

- name: WORDPRESS_DB_NAME

valueFrom:

configMapKeyRef:

name: config-wordpress

key: MYSQL_DATABASE

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: wordpress-svc

spec:

type: LoadBalancer

# externalIPs:

# - 192.168.1.102

selector:

app: wordpress-deploy

ports:

- protocol: TCP

port: 80

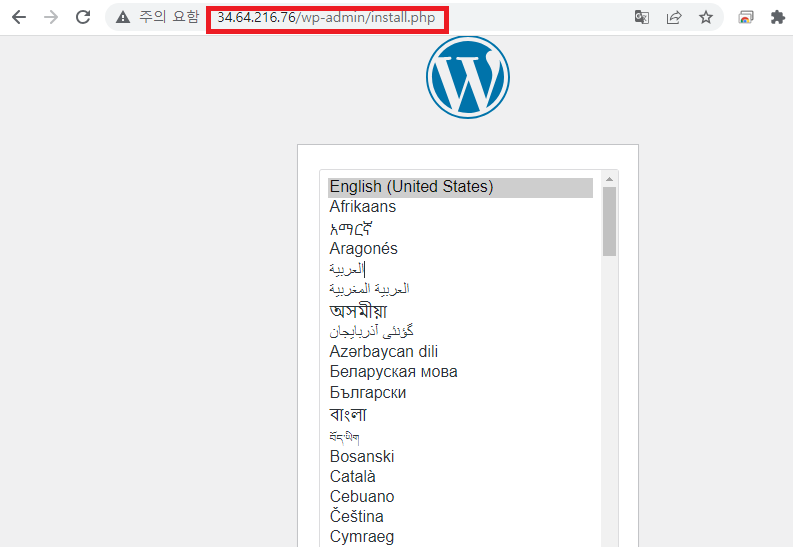

targetPort: 80# k apply -f wordpress-deploy-svc.yaml

# k get svc -o wide

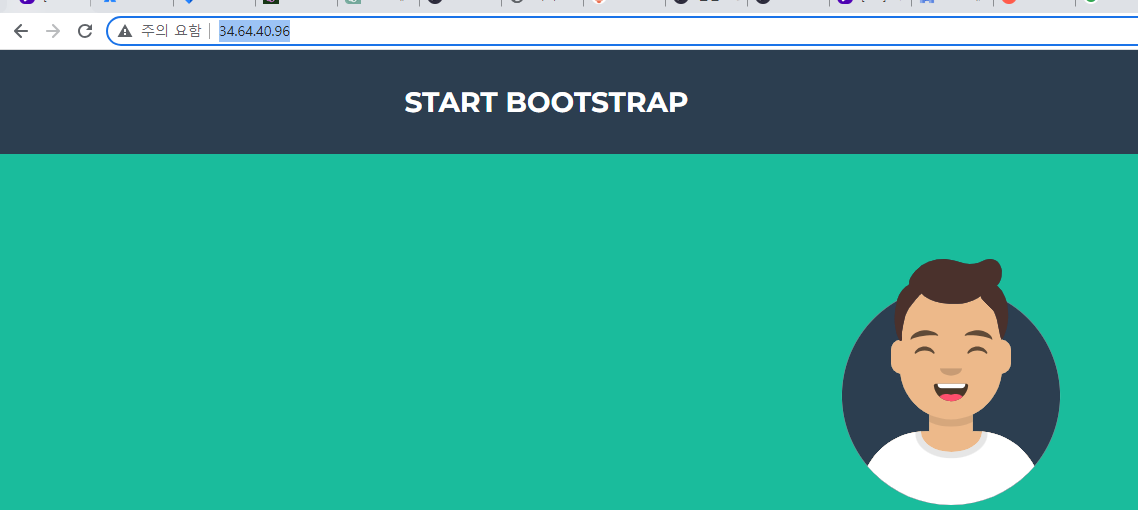

- 서비스가 만들어지 노드의 퍼블릭 ip로 접근(curl ipconfig.io)

# ResourceQuota

- `k create ns hyeongyun`: create the namespace

### vi sample-resourcequota.yaml

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: sample-resourcequota
  namespace: hyeongyun   # change to your namespace
spec:
  hard:
    count/pods: 5
```

```shell
k apply -f sample-resourcequota.yaml
```

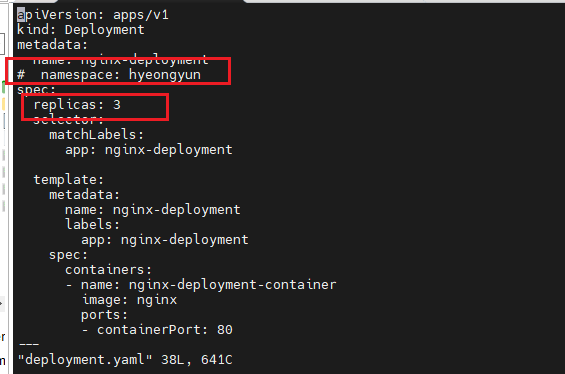

### vi deployment.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: nginx-deployment

spec:

replicas: 3

selector:

matchLabels:

app: nginx-deployment

template:

metadata:

name: nginx-deployment

labels:

app: nginx-deployment

spec:

containers:

- name: nginx-deployment-container

image: nginx

ports:

- containerPort: 80

---

apiVersion: v1

kind: Service

metadata:

name: loadbalancer-deployment

spec:

type: LoadBalancer

# externalIPs:

# - 192.168.0.143

selector:

app: nginx-deployment

ports:

- protocol: TCP

port: 80

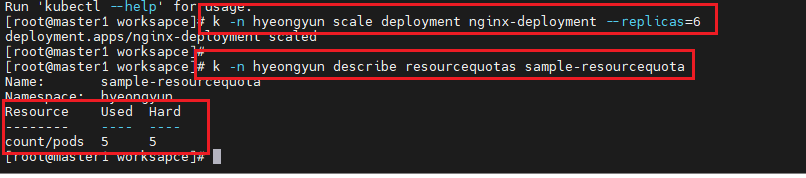

targetPort: 80# k apply -n hyeongyun -f deployment.yaml

- k -n hyeongyun describe resourcequotas sample-resourcequota : 세부적인 확인

# k -n hyeongyun scale deployment nginx-deployment --replicas=6

- scaleOut 결과 scale이 ResourceQuota의 설정에 의해 늘어나지 않는다.

# k delete -f deployment.yaml -> 파일명으로도 리소스들 삭제 가능

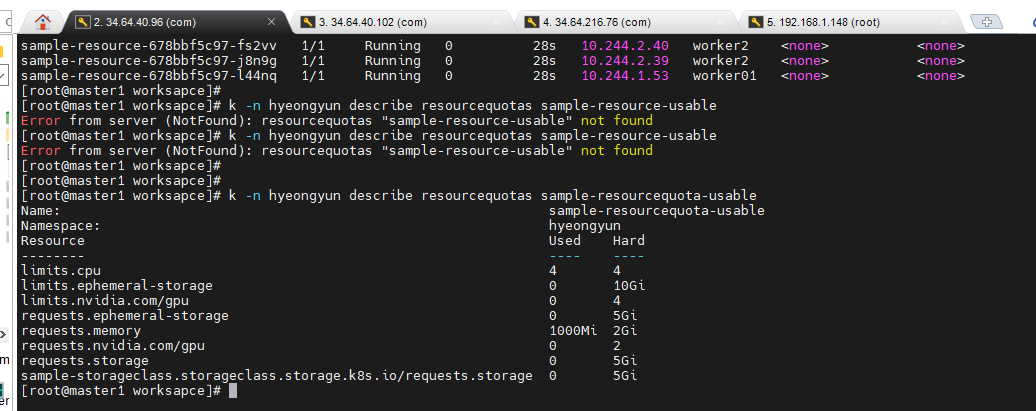

### vi sample-resourcequota-usable.yaml

```yaml
apiVersion: v1
kind: ResourceQuota
metadata:
  name: sample-resourcequota-usable
  namespace: hyeongyun   # target namespace
spec:
  hard:
    # Because this quota tracks requests.memory and limits.cpu, every container in the
    # namespace must declare a memory request and a CPU limit, or the pod is rejected.
    requests.memory: 2Gi
    requests.storage: 5Gi
    sample-storageclass.storageclass.storage.k8s.io/requests.storage: 5Gi
    requests.ephemeral-storage: 5Gi
    requests.nvidia.com/gpu: 2
    limits.cpu: 4
    limits.ephemeral-storage: 10Gi
    limits.nvidia.com/gpu: 4
```

```shell
k -n hyeongyun describe resourcequotas sample-resourcequota-usable
```

### vi sample-pod.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: sample-pod
  namespace: hyeongyun
spec:
  containers:
  - name: nginx-container
    image: nginx:1.16
```

- Creating this pod fails because it does not specify any resource requests or limits.

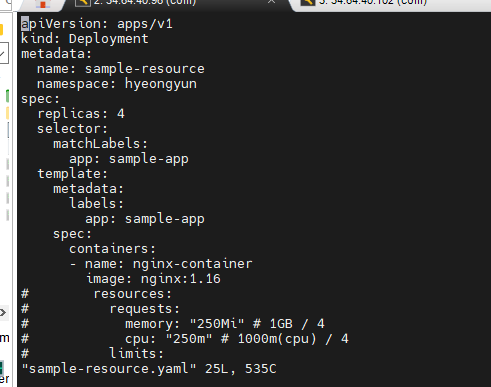

### vi sample-resource.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: sample-resource
  namespace: hyeongyun
spec:
  replicas: 4
  selector:
    matchLabels:
      app: sample-app
  template:
    metadata:
      labels:
        app: sample-app
    spec:
      containers:
      - name: nginx-container
        image: nginx:1.16
        resources:
          requests:
            memory: "250Mi"   # about 1Gi / 4 replicas
            cpu: "250m"       # 1000m / 4 replicas
          limits:
            memory: "500Mi"   # about 2Gi / 4 replicas
            cpu: "1000m"      # 4000m (limits.cpu: 4) / 4 replicas
```

- `k -n hyeongyun describe resourcequotas sample-resourcequota-usable`

- Check the resource usage.
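The quota compares the namespace totals, which here are replicas × the per-pod value, against each `hard` entry. The sketch below checks the two tracked resources for sample-resource.yaml. It uses a hypothetical `fits_quota` function and assumes all numbers are in one unit: MiB for memory, millicores for CPU.

```shell
# Hypothetical helper: does replicas x per-pod amount fit under a quota value?
fits_quota() {
  replicas=$1 per_pod=$2 quota=$3
  total=$(( replicas * per_pod ))
  if [ "$total" -le "$quota" ]; then
    echo "ok: $total <= $quota"
  else
    echo "exceeds: $total > $quota"
    return 1
  fi
}

fits_quota 4 250 2048    # requests.memory: 4 x 250Mi against 2Gi
fits_quota 4 1000 4000   # limits.cpu: 4 x 1000m against 4 CPUs
```

The CPU limit is used exactly to the edge. A fifth replica would push `limits.cpu` to 5000m, so the quota would reject that pod.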

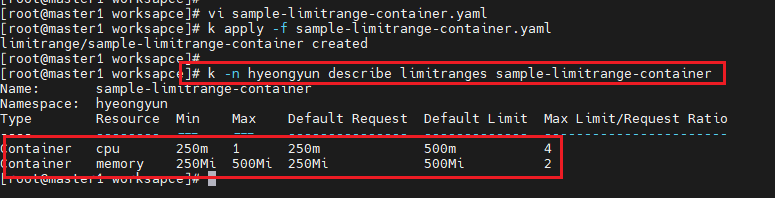

## LimitRange

### vi sample-limitrange-container.yaml

```yaml
apiVersion: v1
kind: LimitRange
metadata:
  name: sample-limitrange-container
  namespace: hyeongyun
spec:
  limits:
  - type: Container
    default:
      memory: 500Mi
      cpu: 500m
    defaultRequest:
      memory: 250Mi
      cpu: 250m
    max:
      memory: 500Mi
      cpu: 1000m
    min:
      memory: 250Mi
      cpu: 250m
    maxLimitRequestRatio:
      memory: 2
      cpu: 4
```

- `k apply -f sample-limitrange-container.yaml`

- `k -n hyeongyun describe limitranges sample-limitrange-container`

- Remove the resources section from sample-resource.yaml and apply it again. The LimitRange now fills in the default requests and limits.
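Among the checks a LimitRange applies to each container are three that involve these fields. The request must not fall below `min`, the limit must not exceed `max`, and limit ÷ request must not exceed `maxLimitRequestRatio`. The simplified sketch below uses a hypothetical `check_limitrange` function covering only those three rules, with values in MiB or millicores. It confirms that the defaults injected above pass.

```shell
# Hypothetical, simplified LimitRange check for one resource of one container.
check_limitrange() {
  request=$1 limit=$2 min=$3 max=$4 ratio=$5
  [ "$request" -ge "$min" ] || { echo "request below min"; return 1; }
  [ "$limit" -le "$max" ] || { echo "limit above max"; return 1; }
  # limit / request <= ratio, checked without division as limit <= ratio * request
  [ "$limit" -le $(( ratio * request )) ] || { echo "ratio exceeded"; return 1; }
  echo "ok"
}

check_limitrange 250 500 250 500 2    # memory defaults: 250Mi request, 500Mi limit
check_limitrange 250 500 250 1000 4   # cpu defaults: 250m request, 500m limit
```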

# Scheduling

@@ Pod scheduling (automatic placement)

```shell
vi pod-schedule.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-schedule-metadata
  labels:
    app: pod-schedule-labels
spec:
  containers:
  - name: pod-schedule-containers
    image: nginx
    ports:
    - containerPort: 80
---
apiVersion: v1
kind: Service
metadata:
  name: pod-schedule-service
spec:
  type: NodePort
  selector:
    app: pod-schedule-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

- `k get pod -o wide`

- The scheduler automatically places the pod on one of the nodes.

@@ Pod nodeName (manual placement)

```shell
vi pod-nodename.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-nodename-metadata
  labels:
    app: pod-nodename-labels
spec:
  containers:
  - name: pod-nodename-containers
    image: nginx
    ports:
    - containerPort: 80
  nodeName: worker2   # pick the node
---
apiVersion: v1
kind: Service
metadata:
  name: pod-nodename-service
spec:
  type: NodePort
  selector:
    app: pod-nodename-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

- `k get pod -o wide`

- The pod is created on the chosen node (worker2).

```shell
# Find pods by label selector and show their labels as well
kubectl get po --selector=app=pod-nodename-labels -o wide --show-labels

kubectl label nodes worker2 tier=dev   # add a label to the node
```

```shell
vi pod-nodeselector.yaml
```

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-nodeselector-metadata
  labels:
    app: pod-nodeselector-labels
spec:
  containers:
  - name: pod-nodeselector-containers
    image: nginx
    ports:
    - containerPort: 80
  nodeSelector:
    tier: dev
---
apiVersion: v1
kind: Service
metadata:
  name: pod-nodeselector-service
spec:
  type: NodePort
  selector:
    app: pod-nodeselector-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

```shell
kubectl label nodes worker2 tier-   # remove the label
kubectl get nodes --show-labels
```

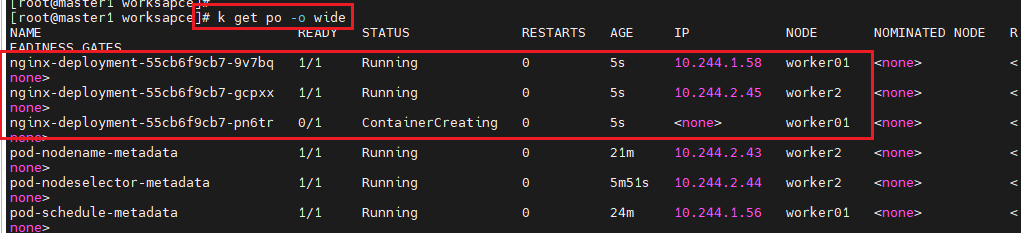

- Edit the deployment.yaml file and apply it.

- Confirm that the pods were created.

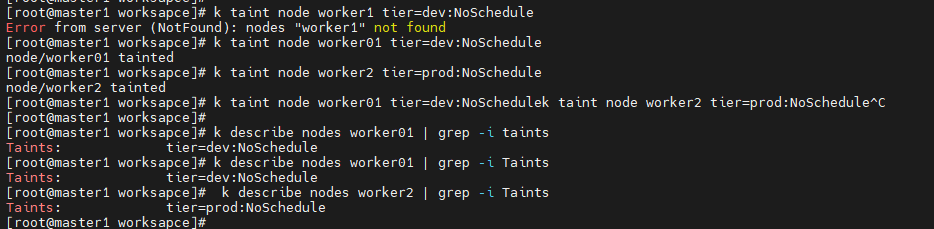

@@ Taints and tolerations

```shell
k taint node worker01 tier=dev:NoSchedule
k taint node worker2 tier=prod:NoSchedule
k describe nodes worker01 | grep -i Taints   # check the taint
k describe nodes worker2 | grep -i Taints
```

### vi pod-taint.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-taint-metadata
  labels:
    app: pod-taint-labels
spec:
  containers:
  - name: pod-taint-containers
    image: nginx
    ports:
    - containerPort: 80
  tolerations:
  - key: "tier"
    operator: "Equal"
    value: "dev"
    effect: "NoSchedule"
---
apiVersion: v1
kind: Service
metadata:
  name: pod-taint-service
spec:
  type: NodePort
  selector:
    app: pod-taint-labels
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

```shell
k apply -f pod-taint.yaml
```

```shell
cp pod-taint.yaml pod-taint2.yaml
```

### vi pod-taint2.yaml

```yaml
apiVersion: v1
kind: Pod
metadata:
  name: pod-taint-metadata2
  labels:
    app: pod-taint-labels2
spec:
  containers:
  - name: pod-taint-containers2
    image: nginx
    ports:
    - containerPort: 80
  tolerations:
  - key: "tier"
    operator: "Equal"
    value: "prod"
    effect: "NoSchedule"
---
apiVersion: v1
kind: Service
metadata:
  name: pod-taint-service2
spec:
  type: NodePort
  selector:
    app: pod-taint-labels2
  ports:
  - protocol: TCP
    port: 80
    targetPort: 80
```

```shell
k apply -f pod-taint2.yaml
k get pod -o wide

k taint node worker01 tier=dev:NoSchedule-
k taint node worker2 tier=prod:NoSchedule-
```

-> Removes the taints. The trailing `-` deletes the taint, and it must name the same key, value, and effect that were set, which is why worker2 uses `tier=prod`.
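Why pod-taint lands on worker01 and pod-taint2 on worker2 follows from the matching rule. With `operator: Equal`, a toleration matches a taint only when key, value, and effect are all equal. With `Exists`, only the key and effect must match. The sketch below reproduces just that rule for one taint, using a hypothetical `tolerates` function where both taint and toleration are written as `key=value:Effect`. It deliberately ignores cases such as an empty effect matching every effect.

```shell
# Hypothetical, simplified taint/toleration match for a single taint.
# Both arguments use the form key=value:Effect; $3 is Equal (default) or Exists.
tolerates() {
  taint=$1 toleration=$2 operator=${3:-Equal}
  t_key=${taint%%=*};      t_rest=${taint#*=}
  t_val=${t_rest%%:*};     t_eff=${t_rest##*:}
  o_key=${toleration%%=*}; o_rest=${toleration#*=}
  o_val=${o_rest%%:*};     o_eff=${o_rest##*:}
  { [ "$t_key" = "$o_key" ] && [ "$t_eff" = "$o_eff" ]; } || return 1
  [ "$operator" = "Exists" ] || [ "$t_val" = "$o_val" ]
}

# pod-taint tolerates tier=dev:NoSchedule, so among the workers only worker01 accepts it:
# tolerates tier=dev:NoSchedule  tier=dev:NoSchedule && echo "worker01: ok"
# tolerates tier=prod:NoSchedule tier=dev:NoSchedule || echo "worker2: rejected"
```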

@@ 커든과 드레인(커든: 노드에 추가로 파드를 스케줄링하지 않도록 함. 드레인: 노드 관리를 위해 파드를 다른 노드로 이동)

## 커든

- 커든 명령은 지정된 노드에 추가로 파드를 스케줄하지 않는다.

- kubectl cordon worker2

- kubectl get no

- kubectl get po -o wide # 노드 1 파드만 스케줄

- 파드가 worker2 노드에 생성되지 않는다.

- kubectl uncordon worker2 : 커든 해제

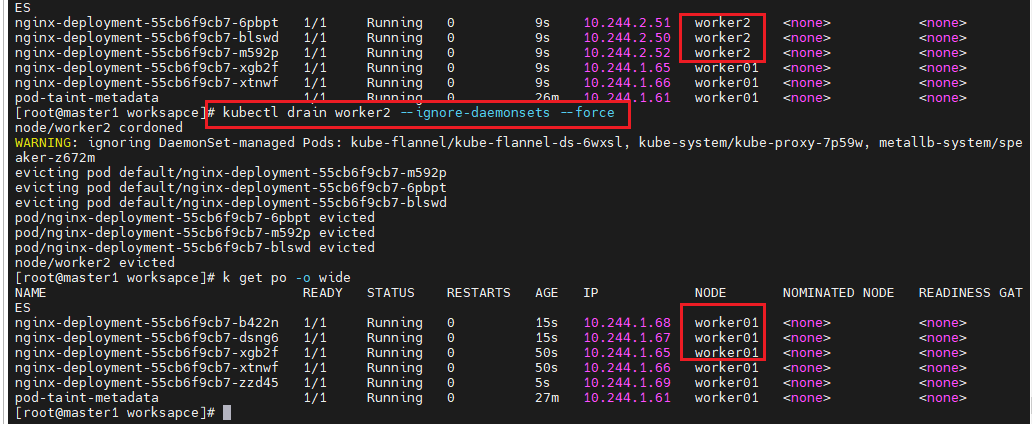

## 드레인 (replicaset와 deployment 같은 컨트롤러는 replica 갯수에 맞지 않으면 다른 노드에 파드를 옮긴다.)

- 따라서 드레인은 파드를 단독으로 생성할 때 보다는 replicaset이나 deployment를 만들 때 사용하는게 좋다.

- 드레인 명령은 지정된 노드에 모든 파드를 다른 노드로 이동시킨다.(사실은 다른 노드에 pod를 다시 만듬;replicaset와 deployment)

- 자동으로 node를 지정한 경우만 가능

- 수동으로 node를 지정한 경우는 파드가 없어진다.

kubectl drain worker2 --ignore-daemonsets --force

kubectl get po -o wide

kubectl get no

- kubectl uncordon worker2

- kubectl get no

# Prometheus

```shell
kubectl create ns monitoring   # namespace dedicated to monitoring

git clone https://github.com/hali-linux/my-prometheus-grafana.git
cd my-prometheus-grafana

kubectl apply -f prometheus-cluster-role.yaml
kubectl apply -f prometheus-config-map.yaml
kubectl apply -f prometheus-deployment.yaml
kubectl apply -f prometheus-node-exporter.yaml
kubectl apply -f prometheus-svc.yaml
kubectl get pod -n monitoring
kubectl get pod -n monitoring -o wide

kubectl apply -f kube-state-cluster-role.yaml
kubectl apply -f kube-state-deployment.yaml
kubectl apply -f kube-state-svcaccount.yaml
kubectl apply -f kube-state-svc.yaml
kubectl get pod -n kube-system   # check the system pods
```

- This installs Prometheus.

- Access it on port 30003 of the public IP of the node where the pod is running.

--- Installing Grafana

```shell
kubectl apply -f grafana.yaml
kubectl get pod -n monitoring
```

- After installation, access it on port 30004.

https://hyeongyun.tistory.com/entry/Kubernetes5

- See this link for the Grafana configuration.
